Quality In Software

Usability

Usability has evolved into user experience with a product. A central goal of usability practitioners and researchers has been to establish a set of methods that facilitate the creation of superior user experiences, i.e., products that are useful, usable, and desirable. The field now has a broad collection of methods, and there is common agreement as to when particular methods are useful. Usability is integrated into companies' standard development processes, with expectations of scheduled deliverables going both ways: prototypes delivered for evaluation, and usability findings delivered to development.

New methods and approaches will enhance the field of usability in three areas:

- new methods that will enable us to evaluate products in contexts that we couldn't serve well before;

- new contexts, where usability is applied in different development environments;

- real-world impacts, where the authors look at the broader milieu in which usability lives, and at its impact outside the development organization.

Model-generated user interfaces hold the promise of producing platform-independent systems and making development more efficient.

The automatic generation tools produced systems that could be used by experienced users but had numerous problems (e.g., convoluted task flows, unlabeled fields, meaningless error messages) that were identified in both usability tests and heuristic evaluations.

The following should be considered to improve usability:

- Quality In Software

- Quality In Interaction

- Quality In Value

Quality In Software

Usability Evaluation of User Interfaces

User interfaces can be generated with a Model-Driven Architecture (MDA) tool.

MDA (Model-Driven Architecture)

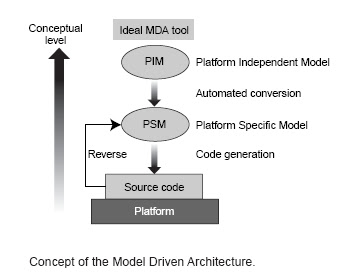

MDA is a software design approach launched by the OMG (Object Management Group) in 2001 to solve the problems of non-automated and non-standardized software manufacturing, and to support the development of large, complex, interactive software applications. It provides a standardized architecture with the following features:

- Interactive applications can easily evolve to address constantly evolving user requirements

- Old, current, and new technologies can be harmonized

- Business logic can be maintained constantly or can evolve independently of technological changes, or of the rest of the interactive application

- Legacy systems can be integrated and unified with new systems

Using the MDA methodology, system functionality may first be defined as a platform-independent model (PIM) through an appropriate Domain Specific Language. Given a Platform Definition Model (PDM) corresponding to CORBA, .Net, the Web, etc., the PIM may then be translated to one or more platform-specific models (PSMs) for the actual implementation, using different Domain Specific Languages, or a General Purpose Language like Java, C#, Python, etc.
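The PIM-to-PSM step described above can be sketched in a few lines. This is only an illustration under stated assumptions: the `Field` and `FormModel` classes and the two transformation functions are hypothetical names invented here, not part of MDA, OMG standards, or any particular tool. The point is that one platform-independent description of a form can be mapped to more than one target.

```python
from dataclasses import dataclass
from html import escape


@dataclass
class Field:
    """One input in the platform-independent model (hypothetical structure)."""
    name: str
    label: str
    kind: str  # "text" or "number"


@dataclass
class FormModel:
    """A toy PIM: it says what the user must enter, not how it is rendered."""
    title: str
    fields: list


def to_html(form):
    """Transform the PIM into one platform-specific form: an HTML page."""
    lines = [f"<form><h1>{escape(form.title)}</h1>"]
    for f in form.fields:
        input_type = "number" if f.kind == "number" else "text"
        lines.append(f'<label for="{escape(f.name)}">{escape(f.label)}</label>')
        lines.append(f'<input id="{escape(f.name)}" type="{input_type}">')
    lines.append("</form>")
    return "\n".join(lines)


def to_cli_prompts(form):
    """Transform the same PIM into a second target: command-line prompts."""
    return [f"{f.label} ({f.kind}): " for f in form.fields]


form = FormModel(
    "Create a subscriber",
    [Field("name", "Name", "text"), Field("meter", "Meter number", "number")],
)
print(to_html(form))
print(to_cli_prompts(form))
```

Because every field carries a label in the model, a problem such as an unlabeled field can be corrected once in the PIM and then propagates to every generated interface.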

The MDA model is related to multiple standards, such as the Unified Modeling Language (UML). Note that the term “architecture” in Model-driven architecture does not refer to the architecture of the system being modeled, but rather to the architecture of the various standards and model forms that serve as the technology basis for MDA.

There are some MDA-compliant methods for developing user interfaces. The goal is to investigate whether MDA-compliant methods improve software usability through model transformations. To accomplish this, two usability evaluations are conducted in the code model (final user interface). Results showed that the usability problems identified at this level provide valuable feedback for improving the platform-independent models (PIMs) and platform-specific models (PSMs), supporting the notion of usability produced by construction.

Integrating Usability into an MDA Process

The usability of an interactive application obtained from a transformation process can be evaluated at several stages of an MDA process: (i) in the CIM, by evaluating the requirements models (i.e., Use Cases), task models, or domain models which represent the user requirements and tasks; (ii) in the PIM, by evaluating the models that represent the abstract user interface such as presentation models or dialogue models; (iii) in the PSM, by evaluating the concrete interface models (if they exist); and (iv) in the CM, by evaluating the final user interface.

The following figure shows the correspondences between the models obtained in a generic MDA process and the usability evaluation activities.

An Overview of the Model-Driven Method based on OlivaNova Tool

OlivaNova is a Programming Machine Tool adhering to MDA principles. It automatically generates an entire information system (including its user interface) based on conceptual models.

This method has been organized in three views: the Computation Independent Model (CIM), the Platform Independent Model (PIM) and the Platform Specific Model (PSM).

To support the conceptual modeling of user interfaces, the method uses a conceptual model called Just-UI, which decomposes the user interaction into three main levels:

- Level 1: HAT (Hierarchical Action Tree) - organizes the semantic functions that will be presented to the different users who access the system.

- Level 2: IUs (Interaction Units) - represent the abstract interface units with which the users will interact to carry out their tasks.

- Level 3: Elementary Patterns - are the primitive building blocks of the user interface and allow the restriction of the behavior of the different interaction units.

A model compiler that implements a set of mappings between conceptual primitives (PIM) and their software representations in the code model automates the development process. The OlivaNova tool provides model compilers that automatically transform the conceptual model into a full interactive application for the following target computing platforms: Visual Basic, C#, ASP.NET, ColdFusion, and JSP, using SQL Server 2000, Oracle, or DB2 as repository.

Experimental Objects

The chosen application is the information management system of AdB (Aguas del Bullent S.A.), a water supply service company located in Oliva, Spain. AdB supports the management of clients, orders, invoices, etc. It is a Microsoft-certified application that was generated entirely from the conceptual model, without any manual activity.

Two representative tasks were chosen for conducting the UEMs (Usability Evaluation Methods).

These tasks were selected through a questionnaire given to the AdB application users to identify the most commonly used and representative tasks:

- Create a subscriber

- Create a product in stock

Both tasks are described below at the different levels of the MDA approach.

Task Description in the CIM (Computation Independent Model)

The task model represents the end user’s viewpoint of the task ‘create a subscriber’. This is a complex, domain-dependent task.

Task Description in the PIM (Platform-Independent Model)

At this level, the tasks to be performed are described as abstract user interfaces using the Presentation Model.

Task Description in the CM (Code Model)

The model compilation shown in the process defines an application model that is equivalent to the Platform-Specific Model in MDA. It corresponds to the final user interface that allows the tasks to be described by explaining the navigation between windows and the expected interaction between the user and the system.

Usability Evaluation without Users

This kind of evaluation is useful for identifying usability problems and improving the usability of an interface by checking it against a standard. In this study, the results of applying the Action Analysis method (Olson and Olson 1990), based on the recommendations of Holzinger (2005), are used as the standard.

Keystroke-Level analysis

To predict task performance times, we decomposed the two selected tasks into the sequence of actions that the user must perform to complete each one. A time is associated with each of these actions or operators (physical or mental), and the times are then totaled.
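The totaling step of a keystroke-level analysis can be sketched as follows. The operator times are commonly cited textbook values for the Keystroke-Level Model and are an assumption here; the study's own operator set and timings are not given in this text, and the example action sequence is hypothetical.

```python
# Commonly cited Keystroke-Level Model operator times, in seconds.
# These values are assumptions for illustration, not the study's figures.
OPERATOR_SECONDS = {
    "K": 0.28,  # press a key (average typist)
    "P": 1.10,  # point with the mouse at a target
    "B": 0.10,  # press or release a mouse button
    "H": 0.40,  # move hands between keyboard and mouse
    "M": 1.35,  # mental preparation
}


def predict_task_time(operators):
    """Sum the time of each operator in the decomposed action sequence."""
    return sum(OPERATOR_SECONDS[op] for op in operators)


# Hypothetical fragment of a task: think, point at a field, click
# (press + release), move hands to the keyboard, type eight characters.
sequence = list("MPBBH") + ["K"] * 8
print(f"Predicted time: {predict_task_time(sequence):.2f} s")
```

Decomposing a whole task this way makes it easy to see which steps dominate the predicted time, for example long runs of mental operators in a complex form.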

Analysis and Interpretation of Results

Even though the action analysis method is mainly used to predict task time, it can also highlight other usability problems (UPs). One issue revealed by the analysis was that task 1 might take too long, since it is a complex task.

Usability Evaluation with Users

Effectiveness relates the goals of using the product to the accuracy and completeness with which these goals can be achieved. It was measured using the measures proposed in the Common Industry Format.

Procedure and Instrumentation

The design of the test followed a logical sequence of events for each user and across tests. The testing procedure was as follows:

- Users were given information about the goals and objectives of the test.

- Users were asked to fill in a demographic questionnaire prior to the testing.

- Users were then given a series of clear instructions that were specific for the test.

- Users were asked to complete the two tasks. To avoid a possible ceiling effect, there was no time limit to complete the tasks.

- Users were then asked to complete a QUIS (Questionnaire for User Interaction Satisfaction - www.cs.umd.edu/hcil/quis/) after completing the last task. This questionnaire is arranged in a hierarchical format and contains: (1) a demographic questionnaire, (2) six scales that measure overall reaction ratings of the system, and (3) four measures of specific interface factors: screen factors, terminology and system feedback, learning factors, and system capabilities.

- Users were given a small reward for their participation.

Operation and Data Collection

We also followed a strict protocol for the usability testing to ensure consistency of the data collected.

The test user was expected to accomplish the two tasks without being assisted. If the user got stuck on a task and felt unable to continue, he/she could ask for help; the instructions given to the users clearly stated that they should only ask for assistance as a last resort. When a user asked for assistance, the task being tested was marked as assisted. That instance of the task was therefore counted in the assisted completion rate variable and not in the unassisted completion rate variable.
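The bookkeeping described above can be sketched as a small calculation. The record layout (a `completed` flag and an `assisted` flag per task attempt) is a hypothetical structure chosen for illustration, and the sample data is made up.

```python
def completion_rates(attempts):
    """Return (unassisted_rate, assisted_rate) over all task attempts.

    Each attempt is a dict with boolean keys 'completed' and 'assisted'.
    A completed attempt counts toward exactly one of the two rates.
    """
    total = len(attempts)
    unassisted = sum(1 for a in attempts if a["completed"] and not a["assisted"])
    assisted = sum(1 for a in attempts if a["completed"] and a["assisted"])
    return unassisted / total, assisted / total


# Made-up data: four attempts at one task.
attempts = [
    {"completed": True, "assisted": False},
    {"completed": True, "assisted": False},
    {"completed": True, "assisted": True},   # user asked for help
    {"completed": False, "assisted": False},  # user gave up
]
unassisted_rate, assisted_rate = completion_rates(attempts)
print(unassisted_rate, assisted_rate)
```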

Analysis and Interpretation of Results

- Characteristics of users

- Effectiveness and Efficiency measures

- Descriptive statistics for perception-based variables

Correlation between Results

Spearman’s rank order correlation analysis can be performed to assess the relationships that exist among usability measures.

- Unassisted Task Completion Rate (UTCR) and task time are significantly negatively correlated (r = -0.910).

This indicates, as expected, that when task time decreases, UTCR increases.

Similarly, UTCR is significantly negatively correlated with assists (r = -0.877). There is also a significant positive correlation between task time and assists (r = 0.898): as the assists increase, the task time also increases. The perception-based variables are different, since most of their correlations are not significant at the 95% confidence level. The exception is screen factors, which has a positive correlation with task time (r = 0.548).
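For readers who want to reproduce this kind of analysis, Spearman's coefficient is the Pearson correlation computed on ranks. The sketch below uses only the standard library; the function names and the sample data are hypothetical and are not the study's measurements.

```python
from math import sqrt


def average_ranks(values):
    """Rank values from 1..n, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = rank
        i = j + 1
    return ranks


def pearson(x, y):
    """Pearson correlation; undefined (division by zero) if x or y is constant."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / sqrt(var_x * var_y)


def spearman(x, y):
    """Spearman's rank order correlation between two equal-length samples."""
    return pearson(average_ranks(x), average_ranks(y))


# Made-up per-user data: task time in seconds and unassisted completion rate.
task_time = [120, 95, 200, 150, 80]
utcr = [0.8, 0.9, 0.4, 0.6, 1.0]
print(spearman(task_time, utcr))
```

A negative coefficient means that the two measures move in opposite directions, which is the pattern reported above for UTCR and task time.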

The aforementioned aspects of usability are not well understood for complex tasks (Frøkjær et al. 2000). Frøkjær’s study also suggests that a weak correlation between usability measures is to be expected and that efficiency, effectiveness, and satisfaction should be considered independent aspects of usability.

Usability Problems and their Implications to the PIM and PSM

- User workload

- No feedback for user selection

- Lack of descriptive labels

- Difficult navigation

- Inflexible/invalid search capabilities

- Inconsistent menu items and window labels.

- Lack of descriptive labels in some fields

- Meaningless error messages.

Correspondences between the Presentation Model, the Transformation Engine Component, and the Final User Interface

Conclusion and Perspectives

The evaluation without users revealed usability problems (UPs) that are more procedural-oriented, while the evaluation with users revealed problems that are more perceptual and cognitive-oriented.

If we can maintain a proof of the correctness of software from its inception until its delivery, it would mean that we can prove that it is correct by construction. Similarly, if we can maintain a proof of the usability of a user interface from its specifications until the final code, it would mean that we can prove that it is usable by construction.

This contrasts with development methods that do not rely on correctness by construction, in which defects are introduced and then removed.

Software Quality

One of the most renowned classifications of quality is a multi-perspective view proposed by Kitchenham and Pfleeger:

- The transcendental perspective deals with the metaphysical aspect of quality

- The user perspective is concerned with the appropriateness of the product for a given context of use

- The manufacturing perspective represents quality as conformance to requirements

- The product perspective implies that quality can be appreciated by measuring the inherent characteristics of the product

- The value-based perspective recognizes different importance (or value) of quality to various stakeholders

CMM (Capability Maturity Model) / CMMI (Capability Maturity Model Integration)

CMM emerged in 1990 as a result of the research effort conducted by specialists from SEI (Software Engineering Institute) of Carnegie Mellon University. Its next version, CMMI, known in the industry as a best practices model, is mostly used to "provide guidance for an organization to improve its processes and ability to manage development, acquisition, and maintenance of products and services."

SPICE (Software Process Improvement and Capability dEtermination)

SPICE is an international initiative to support the development of an International Standard for Software Process Assessment. It provides a framework for the assessment of processes. Process assessment examines the processes used by an organization to determine whether they are effective in achieving its goals.

- Part 1 - Concepts and vocabulary

- Part 2 - Performing an assessment

- Part 3 - Guidance on performing an assessment

- Part 4 - Guidance on use for process improvement and process capability determination

- Part 5 - An exemplar Process Assessment Model

SWEBOK (Software Engineering Body of Knowledge)

The purpose of the SWEBOK is "to provide a consensually-validated characterization of bounds of the software engineering discipline and to provide a topical access to the Body of Knowledge supporting that discipline."

In SWEBOK, the software quality subject is decomposed into individual topics grouped in four sections:

- SQC (Software Quality Concepts)

- Purpose and Planning of SQA and V&V (Verification and Validation)

- Activities and techniques for SQA and V&V

- Other SQA and V&V Testing

Basic Concepts in Software Quality Engineering

*Engineering* is the profession in which a knowledge of the mathematical and natural sciences, gained by study, experience, and practice, is applied with judgment to develop ways to utilize, economically, the materials and forces of nature for the benefit of mankind.

*The application of engineering to quality of software* is the application of a continuous, systematic, disciplined, quantifiable approach to the development and maintenance of quality throughout the whole life cycle of software products and systems.

Quality Models

The quality model can be used to predict the overall quality.

Quality in use

The quality in use model is a two-layer model composed of characteristics and quality measures.

Internal and External Quality Model

External quality requirements represent end-user concerns and can be clearly expressed by a stakeholder in the business vision phase.

To identify internal quality requirements, these requirements must be sought either through deduction from external quality requirements or further in the development cycle when more technical information is available.

The internal and external quality model is a three-layer model composed of quality characteristics and subcharacteristics, with more than 200 associated measures.

Quality Measurement

Benefits of measuring in software engineering:

- Effective and precise communication

- Means to control and supervise projects

- Quick identification of problems and their eventual resolutions

- Taking complex decisions based on data, not on guesses

- Rational justification of decisions

Quality Evaluation

The ultimate purpose of an evaluation is to obtain reliable information, allowing us to make a wise and justified decision in an actual situation (or stage) of the development process.

The essential parts of the software quality evaluation process are:

- The quality model

- The method of evaluation

- Software measurement

- Supporting tools

Quality Requirements

Requirements are the external specification of specific needs that a product is expected to satisfy. Identifying quality requirements that can be elicited, formalized, and further evaluated in each phase of the full software product lifecycle has become a crucial task in the process of building a high-quality software product.

Operational Quality Requirements

If the software product to be developed is planned for a large population of users, this category is valid, and the analyst may seek more details to identify related requirements.

Quality in Use Requirements

Because this category represents purely application context-oriented quality requirements, most quality in use requirements could and should be identified in this phase.

Software Quality

To evaluate the maturity of a software development organization, sophisticated methods and models like CMMI, SPICE, or ISO 9000 can be applied. But the result may not entirely reflect reality.

SQIM (Software Quality Implementation Model)

Basic Hypotheses

- Engineering quality into a software product is an effort that should be conducted throughout the whole life cycle of software development.

- Because the process of quality engineering is similar in many ways to the development process, it seems appropriate that it follows similar rules and applies similar structures.

- Because several software development process models exist, SQIM adheres to the most widely recognized and accepted.

- The quality model that SQIM adheres to is the one that is widely accepted and recognized.

Each phase of SQIM has its own set of activities and subprocesses that should be observed by a quality engineer in his or her daily practice.

Each phase of SQIM associated with its counterpart in the prototyping model will have to run through the same loops of verifying and validating before the obtained status will allow you to move to the next phase.

Model CTM (Consolidated Traceability Model)

Jointly applying SQIM, ISO/IEC 9126, and CQL allowed for creating a complementary model dedicated to quality traceability, with every link from the lowest-level quality measure traceable to quality requirements. Merging the two models and adding an intermediate influence layer between the two parts gives a CTM that allows for building a complete functionality-quality traceability matrix.

The model incorporates both technological and procedural structures that help to identify the correspondence between the stages of development, related by-products, associated quality elements, and requirements of all types.

Analysis and Evaluation

Modeling can be an appropriate evaluation technique. The modeling challenge is to develop a model at an appropriate level of detail to provide a basis for expressing properties, and thereby generating appropriate sequences.

The prototyping issue is to be able to produce systems quickly enough to explore the role that the system will play. The mapping between models and prototypes concerns how to maintain an agile approach to the development of prototypes, while at the same time providing the means to explore early versions of the system using formal models.

A further issue is the class of models required to analyze the range of requirements that would be relevant to ambient and mobile systems, and how to ensure practical consistency between them and to avoid bias and inappropriate focus as a result of modeling simplifications.

Agile Software Development (ASD)

In the late 1990s, several methodologies began to get increasing public attention. Each had a different combination of old ideas, new ideas, and transmuted old ideas. But they all emphasized close collaboration between the programming team and business experts; face-to-face communication (as more efficient than written documentation); frequent delivery of new deployable business value; tight, self-organizing teams; and ways to craft the code and the team such that the inevitable requirements churn was not a crisis.

The Agile Software Development Lifecycle (SDLC)

- Iteration 0 - the first week. Initiate the project by garnering initial support and funding for the project.

- Development phase - Deliver high-quality working software that meets the changing needs of stakeholders.

- End Game phase - Do final testing of the system for system transition into production.

- Production - Keep systems useful and productive.

Modeling on an Agile Project

- Requirements modeling - The goal is to get a good gut feel for what the project is all about.

- Architectural modeling - The goal of the initial architecture modeling effort is to try to identify an architecture that has a good chance of working.

Usability Evaluation of Multimodal Systems

A specific aspect of multimodal user interfaces is that interaction techniques, input/output devices, and sensory channels are closely related.

UEM (Usability Evaluation Methods)

- User Testing

* Thinking aloud protocol

* Wizard of Oz

* Log file analysis

* Field observation

- Inspection

* Cognitive walkthrough

* Heuristic Evaluation

* Guidelines review

- Inquiry

* Questionnaires (satisfaction, preferences, etc.)

* Questionnaires (cognitive workload, NASA-TLX)

- Analytical Modeling

* Model-based analysis

The goal of a usability test is to identify major usability problems within the interface.

Standard Usability Evaluation is typically performed in a laboratory, where users are asked to perform selected tasks. Testing multimodal interactions usually requires an additional activity corresponding to the presentation of input and output devices to the user.

Cognitive walkthroughs are rarely used in usability testing of multimodal user interfaces. When a cognitive walkthrough is used to evaluate multimodal interfaces, it is important to adopt guidelines for defining the questions in the evaluation form.

Model-based Evaluation

Model-based evaluation helps standard usability evaluation methods overcome their reported weaknesses when testing multimodal interfaces. It can be easily conducted in combination with a usability test or a cognitive walkthrough.

